30 Machine Learning Interview Questions & ML Interview Study Guide

Machine Learning Engineers and Data Scientists who focus on ML will find this study guide of the most important topics covered during Machine Learning Interviews useful for their next job hunt. If you're a Data Analyst, this guide probably overkill... you're better of practicing SQL Interview Questions. But for the rest of you hardcore deep-learning fans please enjoy these 30 Machine Learning Interview Questions that were recently asked by companies like Google, Netflix, & Stripe.

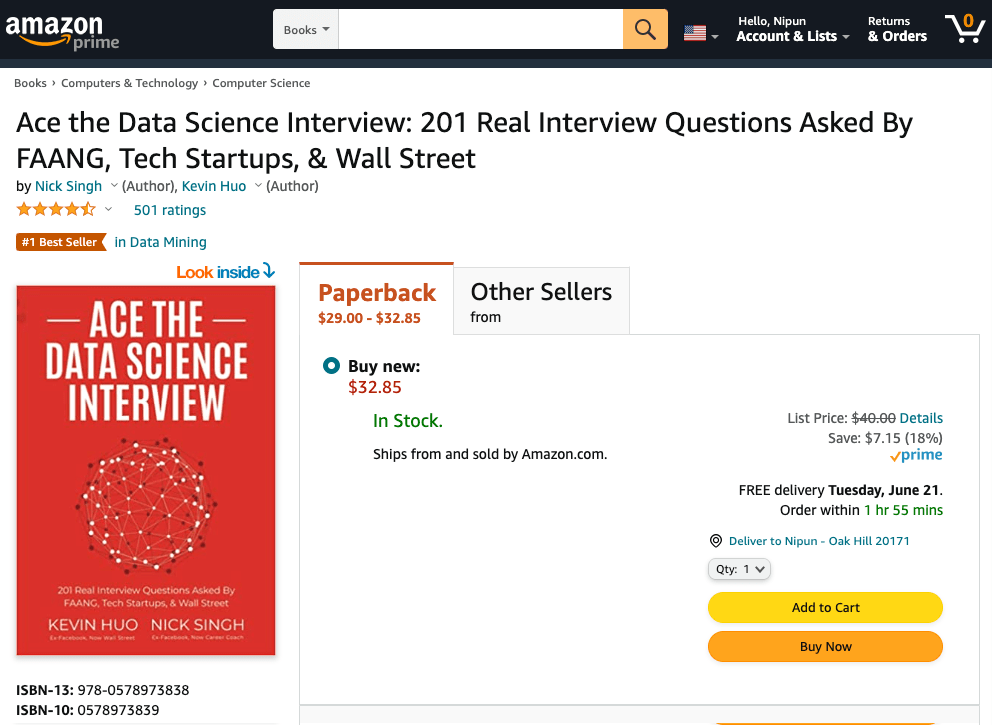

Kevin Huo, an Ex-Facebook Data Scientist who now works at a Hedge Fund, and I, solved 8 of the problems in detail. We left the rest of the problems to be solved in our book, Ace the Data Science Interview (now out on Amazon!).

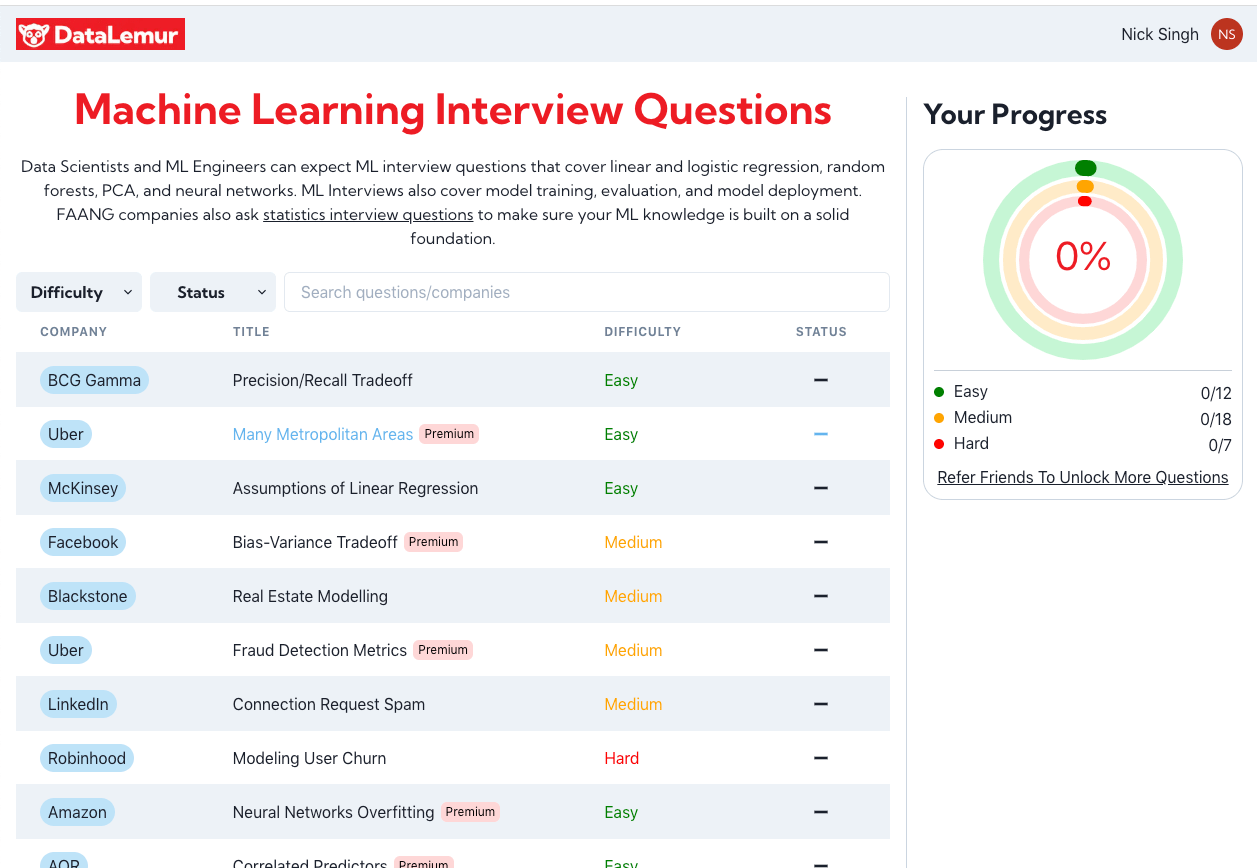

Also, some of the ML questions from this aticle, and our book, can be found for free on DataLemur (which hosts hundreds of Data Science and ML Interview questions).

This guide covers:

- Frequently asked ML topics to review for technical interviews. We briefly cover the math behind ML, linear regression, dimensionality reduction, clustering, and much more.

- 30 Machine Learning Interview Problems

- 8 ML Interview Question Solutions

- How to get more Data Science Interview Questions

Frequently Asked Machine Learning Topics During Technical Interviews

Here's a general overview of areas covered in ML interviews by top tech companies and Wall Street. To deeply learn these topics, check out some of our recommended textbooks, online courses, and bootcamps we featured in our Break Into Data Science Guide. Reading our 40 Probability & Statistics Data Science Interview Questions guide can also be helpful, as that math underpins many of the ML techniques covered below.

Mathematical Prerequisites

Random variables

Random variables are a core topic within probability and statistics, and interviewers are generally looking for an understanding of the principles and basic ability to manipulate them. A random variable is a quantity with an associated probability distribution, and can either be discrete (countable range) or continuous (uncountable range). For a discrete random variable, there is a probability mass function and for a continuous random variable there is a probability density function.

$$\text{ Discrete: } \sum_{x \in X} p(x) = 1, \text{ Continuous: } \int_{-\infty}^{\infty} p(x)dx = 1$$

The cumulative distribution function is given by:

$$F(x) = p(X \leq x)$$

For any given random variable X, it has the following properties (below we assume X is continuous, but the analogous holds for discrete random variables). The expectation (average value) is given by:

$$E[X] = \int_{-\infty}^{\infty} xp(x)dx$$

and the variance is given by:

$$Var(X) = E[(X-E[X])^2] = E[X^2] - E[X^2]$$

For any given random variables X and Y, the covariance, a linear measure of relationship, is defined by:

$$Cov(X,Y) = E[(X-E[X])(Y-E[Y])] = E[XY] - E[X]E[Y]$$

and normalization of covariance is the correlation between X and y:

$$\rho(X, Y) = \frac{Cov(X, Y)}{\sqrt{Var(X)Var(Y)}}$$

Probability Distributions

There are many probability distributions, and interviewers generally aren't testing whether you've memorized specific properties on each (although it is helpful to know basics), but more-so that you can apply them to specific situations properly. Because of this, the most commonly discussed one in Data Science Interviews is the Normal distribution, which has many real-life applications. For a single variable the probability density is given by the following, for a mean and variance parameter:

$$p(x|\mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}}\exp{-\left(\frac{(x-\mu)^2}{2\sigma^2}\right)}$$

For fitting parameters, there are two general methods. In maximum likelihood estimation (MLE) the goal is estimate the most likely parameters given a likelihood function:

$$\theta_{MLE} = \argmax_{\theta} L(\theta), \text{ where } L(\theta) = p(x_1,...x_n|\theta)$$

Since the values of X are assumed to be i.i.d, then the likelihood function becomes:

$$L(\theta) = \prod_{i=1}^{n}p(x_i|\theta)$$

It is convenient to take logs (since log is a monotonically increasing function, maximizing the log-likelihood is equivalent to maximizing the likelihood):

$$\log L(\theta) = \sum_{i=1}^{n}\log p(x_i|\theta)$$

Another way of fitting parameters is through maximum a posteriori estimation (MAP), which assumes a prior distribution.

$$\theta_{MAP} = \argmax_{\theta} p(\theta)p(x_1...x_n|\theta)$$

where the similar log-likelihood from before applies.

While the Normal distribution is commonly asked about in interviews, knowing the details and use cases for other well-known distributions like Uniform, Poisson, Binomial, & Geometric distribution is important. It never hurts being able to do the derivations for expectation, variance, or other higher moments.

Linear Algebra

Generally, interviewers won't expect you to delve deeply into linear algebra unless there are specific machine learning emphases. However, it is still helpful to review basics since it helps with the understanding of various algorithms and theoretical underpinnings. There are many sub-topics within linear algebra, but one sub-topic worth discussing briefly is eigenvalues and eigenvectors. Mechanically, for some square matrix A, we have a vector x is an eigenvector of A if:

$$Ax = \lambda x$$

Since a matrix is a linear transformation, eigenvectors are cases whereby the resulting transformation of the matrix on that vector results in the same direction as before, although with some scaling factor (the eigenvalues). There are many real-life use cases of eigenvalues and eigenvectors: for example, identifying the orientation of large datasets (discussed in PCA), or for dynamical systems (how a system oscillates and how quickly it will stabilize).

The decomposition of a square matrix into its eigenvectors is called an eigendecomposition. Note that while not all matrices are square, through Singular Value Decomposition (SVD), every matrix has a decomposition:

$$A = U\Sigma V^T$$

Although the mathematical details are beyond the scope of this discussion, both eigen decomposition and SVD are worth looking into in detail before your technical interview.

Bias-Variance Tradeoff

This is a topic occasionally asked in interviews due to relevance with overfitting and model selection. With any model, we generally are trying to estimate a true underlying:

$$y = f(x) + w$$

through data where w is usually noise that is zero-mean and a Gaussian random variable. As mentioned prior, MLE and MAP are reasonable ways to deduce parameters. To assess how well the model fits, we can decompose the error of y as the following:

1) bias (how well the values come close to the true underlying f(x) values)

2) variance (how much the prediction changes based on training inputs)

3) irreducible error (due to inherently noisy observation processes.

There is a trade-off between bias and variance, and this is a useful framework for thinking about how different models operate. The overall goal is to control overfitting (and not generalizing well out of sample) to produce stable and accurate models.

Linear Regression

This method is one of the most frequently taught methods and has many real-life applications, ranging from predicting housing prices to studying the efficacy of medical trials. Interviewers asking this are generally trying to assess your understanding of the basic formulations and occasionally the relevance of knowing some of the theory to real life applications.

In linear regression, the goal is to estimate y = f(x) of the following form:

$$y = X\beta$$

where X is a matrix of data points and β the vector of weights. In the least-squares context, linear regression minimizes the residual sum-of-squares (RSS), which is given by:

$$RSS(\beta) = (y-X\beta)^T(y-X\beta)$$

In regression, one can use MLE to estimate the β values by using a multivariate Gaussian:

$$Y\sim N(X\beta, \sigma^2I)$$

which leads to results that are the same as minimizing the RSS. For a MAP context, there can be priors for β, of which leads to Ridge Regression, which penalizes the weights to prevent overfitting. In Ridge regression, the objective function becomes minimizing:

$$(y-X\beta)^T(y-X\beta) + \lambda |\beta|_2^2$$

Dimensionality Reduction

Principal Components Analysis

This topic is less common in interviews but is often alluded to during discussions about data-preprocessing or feature engineering. Decomposing data into a smaller set of variables is very useful for summarizing and visualizing data. This overall process is called dimensionality reduction. One common method of dimensionality reduction is Principal Components Analysis (PCA), which reconstructs data into a lower dimensional setting. It looks for a small number of linear combinations of a vector x (say it is p-dimensional) to explain the variance within x. More specifically, we want to find the vector w of weights such that we can define the following linear combination:

$$y_i = w_i^Tx = \sum_{j=1}^{p}w_{ij}x_j$$

subject to the following:

$$y_i \text{ is uncorrelated with } y_j, var(y_i) \text{ is maximized}$$

Hence we have the following procedural description where first we find the first component with maximal variance, and then the second that is uncorrelated with the first, and continue this procedure iteratively. The idea is to end with say, k dimension such that

$$y_1, ... y_k \text{ explain the majority of variance, } k << p$$

Using some algebra, the final result is an eigendecomposition of the covariance matrix of X, whereby the first principal component is the eigenvector corresponding to the largest eigenvalue and so on.

Classification

General Framework

Classification is commonly asked during interviews since due to the the abundance of real-life applications. Tech companies love to ask about classifying customers and users into different segments.

The goal of classification is to assign a given data point to one of K classes, instead of a continuous value (as in regression), and there are two types of models. The first is generative which models the joint probability distribution between X and Y. That is, for an input X, we want to classify an arbitrary data point x with the following class label:

$$\hat{y} = \argmax_{k} p(x, Y=k)$$

This joint distribution between X and Y is given by:

$$p(X, Y) = p(Y|X)p(X)$$

and for each given class k we have:

$$p_k(X) = p(X|k) p(k)$$

such that:

$$\hat{y} = \argmax_{k} p(Y=k|x)$$

The result of maximizing the posterior means there will be decision boundaries between classes where the resulting posterior probability is equal.

The second is discriminative, which directly learn a decision boundary by choosing a class that maximizes the posterior probability distribution:

$$\hat{y} = \argmax_{k} p(Y=k|x)$$

So both methods end up choosing a predicted class that maximize the posterior probability distribution; the difference is just in the approach.

Logistic Regression

One of the popular classification algorithms is logistic regression, and is often asked in conjunction with linear regression during interviews as a way to assess basic knowledge on classification algorithms. In logistic regression, we take a linear output and convert it to a probability between 0 and 1 using the sigmoid function:

$$S(x) = \frac{1}{1+e^{-x}}$$

In matrix form, the decision looks like the following, where a 1 is the target class if the output is at least 0.5:

$$P(\hat{Y} = 1|x) = S(w^Tx)$$

The loss function for logistic regression is the log-loss:

$$L(w) = \sum_{i=1}^{n}y_i\log \left(\frac{1}{S(w^Tx)}\right) + (1-y_i)\left(\frac{1}{1-S(w^Tx)}\right)$$

Note that the posterior is being modeled directly and hence logistic regression is a discriminative model.

Linear Discriminant Analysis

Linear Discriminant Analysis (LDA) is not a commonly asked topic during interviews but serves as an interesting topic to know since it is a generative model rather than a discriminative model (which logistic regression was). It assumes that given some class k, the distribution of any data from that class follows a multivariate Gaussian:

$$X|Y=k \sim N(\mu_k, \Sigma_k)$$

Recall from Bayes rule that maximizing the joint probability over labels is equivalent to maximizing the posterior probability, so LDA aims to maximize:

$$\hat{y} = \argmax_{k} p(Y=k|x)$$

Particularly, we have:

$$P(Y=k|x) = \frac{f_k(x)\pi_k}{\sum_{i=1}^{K}f_i(x)\pi_i}$$

where f(x) for each k is the class density function. LDA assumes that densities are multivariate Gaussian, and additionally assumes that the covariance matrix is common among all classes. The resulting decision boundary is linear (and hence the name), as there is also Quadratic Discriminant Analysis where the boundary is quadratic.

Decision Trees

Decision trees and random forests are commonly asked during interviews since they are flexible and often well-performing models in practice. In particular, it helps to have a basic understanding of how both are trained and used, as well as how the feature splits occur (entropy and information gain).

Training Decision Trees

A decision tree is a model that can be represented in a tree fashion whereby at each split, there is a separation based on features, resulting in various leaf nodes whereby there is a result (classification or regression). For this discussion, we will focus on the classification setting. They are trained in a greedy and recursive fashion starting at the root, where the goal is to choose splits that increases the most certainty on which class a particular data point belongs to.

Entropy

The entropy of a random variable Y quantifies the uncertainty of its values, and is given by the following, for a discrete variable Y which takes on k states:

$$H(Y) = -\sum_{i=1}^{k}P(Y=k)\log P(Y=k)$$

For a simple Bernoulli random variable, this quantity is highest when p = 0.5 and lowest when p = 0 or p = 1, which aligns intuitively with the definition since if p = 0 or 1, then there is no uncertainty on the result. Generally, if a random variable has high entropy, then its distribution is closer to a uniform one than a skewed one.

Consider an arbitrary split. We have H(Y) from the beginning training labels, and say we have some feature X that we want to split on. We can characterize the reduction in uncertainty by the information gain, which is given by:

$$IG(Y, X) = H(Y) - H(Y|X)$$

The larger this quantity, the higher the reduction in uncertainty in Y by splitting on X. Therefore, the general process is to assess all features in consideration and choose the feature that maximizes this information gain. Then, recursively continue the process for the two resulting branches.

Random Forests

Typically an individual decision tree may be prone to overfitting, so in practice, usually random forests yield better out-of-sample predictions. A random forest is an ensemble method that utilizes many decision trees and averages the decision from them. It reduces overfitting and correlation between the trees by two methods: 1) bagging (bootstrap aggregation), whereby some m < n (where n is the total number of) data points are arbitrarily sampled with replacement and used as the training set, 2) a random subset of the features are considered at each split (to prevent always splitting on any particular feature).

Clustering

Clustering is a popular interview topic since there are many real life applications. It is often done for data visualization, and can be used to identify outliers that useful in cases like fraud detection. It also helps to have a basic understanding of how the parameters are learned in this context, versus an MLE/MAP approach from prior.

Overview of Clustering

The goal of clustering is to partition a dataset into various clusters looking only at the input features. This is an example of unsupervised learning. Ideally, the clustering has two properties: 1) points within a given cluster are similar to one another (high intra-cluster similarity)

2) points in different clusters are not similar to one another (low inter-cluster similarity).

K-means clustering

K-means clustering partitions data into k clusters and starts by choosing centroids of each of the k clusters arbitrarily. Iteratively, it updates partitions by assigning points to the closest cluster, updating centroids, and repeating until convergence.

Mathematically, K-means solves the following problem by minimizing the following loss function (given points, and centroid values):

$$L = \sum_{j=1}^{k}\sum_{x \in S_j}\mid\mid x_i - \mu_j \mid\mid^2$$

The iterative process continues until the cluster assignment updates does not further the objective function.

Gaussian Mixture Model

A Gaussian Mixture Model (GMM) is a model whereby for any given data point x, we assume that it comes from one of k clusters, each with a particular Gaussian Distribution.

That is, among the K classes we have:

$$p(x) = \sum_{k=1}^K \pi_k N(x\mid \mu_k, \Sigma_k)$$

where the π coefficients are the mixing coefficients on the clusters and are normalized so they sum up to 1. Let θ denote the unknown mean and variance parameters for each of the K classes, along with K the mixing coefficients. Then the likelihood is given by:

$$p(\theta \mid X) = \prod_{i=1}^{n} p(x) = \prod_{i=i}^{n} \sum_{k=1}^K \pi_k N(x\mid \mu_k, \Sigma_k)$$

and therefore the log-likelihood is:

$$\log p(\theta \mid X) = \sum_{i=i}^{n} \log \sum_{k=1}^K \pi_k N(x\mid \mu_k, \Sigma_k)$$

The parameters can be calculated iteratively used Expectation-Maximization (EM) which is discussed below.

Expectation Maximization

Expectation Maximization (EM) is a method to estimate parameters for latent variables, such as the two examples of K-means and GMMs above, whereby some variables can be observed directly, whereas others are latent and cannot be observed directly. In particular, for clustering, the cluster assignment is the latent variable since that is not directly observed. The general steps are as follows, using Z as the latent variables, X as the observed variables, and unknown parameters θ. Assume the current parameters are given by: θ'. The first step is to estimate:

$$p(Z|X, \theta')$$

using the current parameter estimates. The second step is to estimate the most likely θ* that maximizes the log-likelihood of the data, which is given by:

$$\sum_{Z}p(Z\mid X, \theta')\log p(X, Z\mid \theta)$$

And continue iteratively until convergence.

30 Machine Learning Interview Problems

18 Medium Difficulty ML Interview Questions

1. (Robinhood) What is user churn, and how can you build a model to predict whether a user will churn? What features would you include in the model and how do you assess importance?

2. (Affirm) Assume we have a classifier that produces a score between 0 and 1 for the probability of a particular loan application being fraudulent. In this scenario: a) what are false positives, b) what are false negatives, and c) what are the trade-offs between them in terms of dollars and how should the model be weighted accordingly?

3. (Uber) Say you need to produce a binary classifier for fraud detection. What metrics would you look at, how is each defined, and what is the interpretation of each one?

4. (Google) Say you are given a very large corpus of words. How would you identify synonyms?

5. (Airbnb) Say you are running a simple logistic regression on a problem but find the results to be unsatisfactory. What are some ways you might improve your model or what other models might you look into?

6. (Amazon) Describe both generative and discriminative models and give an example of each?

7. (Affirm) Assume we have some credit model, which has accurately calibrated (up to some error) score of how credit-worthy any individual person is. For example, if the model’s estimate is 92% then we can assume the actual score is between 91 and 93. If we take 92 as a score cutoff and deem everyone above that score as credit-worthy, are we over-estimating or underestimating the actual population’s credit score?

8. (Microsoft) What is the bias-variance tradeoff? How is it expressed using an equation?

9. (Uber) Define the cross validation process. What is the motivation behind using it?

10. (Airbnb) Say you are modeling the yearly revenue of new listings. What kinds of features would you use? What data processing steps need to be taken, and what kind of model would run?

11. (Stitch Fix) How would you build a model to calculate a customer's propensity to buy a particular item? What are some pros and cons of your approach?

12. (Uber) What is L1 and L2 regularization? What are the differences between the two?

13. (Amazon) Define what it means for a function to be convex. What is an example of a machine learning algorithm that is not convex and describe why that is so?

14. (Affirm) Assume we have a classifier that produces a score between 0 and 1 for the probability of a particular loan application behind a fraud. Say that for each application’s score, we take the square root of that score. How would the ROC curve change? If it doesn’t change, what kinds of functions would change the curve?

15. (Amazon) Describe gradient descent and the motivations behind stochastic gradient descent?

16. (Microsoft) Explain what Information Gain and Entropy are in a Decision Tree?

17. (Airbnb) Say you are tasked with producing a model that can recommend similar listings to an Airbnb user when they are looking at any given listing. What kind of model would you use, what data is needed for that model, and how would you evaluate the model?

12 Hard ML Interview Questions

18. (Microsoft) Describe the idea behind boosting. Give an example of one method and describe one advantage and disadvantage it has?

19. (Google) Say we are running a probabilistic linear regression which does a good job modeling the underlying relationship between some y and x. Now assume all inputs have some noise ε added, which is independent of the training data. What is the new objective function? How do you compute it?

20. (Netflix) What is the loss function used in k-means clustering for k clusters and n sample points? Compute the update formula using 1) batch gradient descent, 2) stochastic gradient descent for the cluster mean for cluster k using a learning rate ε.

21. (Tesla) You're working with several sensors that are designed to predict a particular energy consumption metric on a vehicle. Using the outputs of the sensors, you build a linear regression model to make the prediction. There are many sensors, and several of the sensors are prone to complete failure. What are some cost functions you might consider, and which would you decide to minimize in this scenario?

22. (Stripe) Say we are using a Gaussian Mixture Model (GMM) for anomaly detection on fraudulent transactions to classify incoming transactions into K classes. Describe the model setup formulaically and how to evaluate the posterior probabilities and log likelihood. How can we determine if a new transaction should be deemed fraudulent?

23. (Netflix) What is Expectation-Maximization and when is it useful? Describe the setup algorithmically with formulas.

24. (Opendoor) Describe the setup and assumptions of using linear discriminant analysis (LDA). Show mathematically that the decision boundaries are linear.

25. (Microsoft) Formulate the background behind an SVM, and show the optimization problem it aims to solve.

26. (Netflix) Describe entropy in the context of machine learning, and show mathematically how to maximize it assuming N states.

27. (Airbnb) Suppose you are running a linear regression and model the error terms as being normally distributed. Show that in this setup, maximizing the likelihood of the data is equivalent to minimizing the sum of squared residuals.

28. (Stripe) Describe the model formulation behind logistic regression. How do you maximize the log-likelihood of a given model (using the two-class case)?

29. (Netflix) Say X is a univariate Gaussian random variable. What is the entropy of X?

30. (Uber) Describe the idea behind PCA and go through its formulation and derivation in matrix form. Go through the procedural description and solve the constrained maximization.

If your hungry for more problems, check out the 40 Prob & Stat Interview problems, and our free 9-day Data Science Interview crash course.

8 Machine Learning Interview Solutions

Problem #3 Solution:

Some main important ones are precision, recall, and the AUC of the ROC curve. Let us define TP as a true positive, FP as a false positive, TN as a true negative, and FN as a false negative.

Precision is defined by TP / (TP + FP). It answers the question “what percent of fraudulent predictions were correct?” and is important to maximize since you want your classifier to be as correct as possible when it identifies a transaction as fraudulent.

Recall is defined by TP / (TP + FN). It answers the question “what percent of fraudulent cases were caught?” and is important to maximize since you want your classifier to have caught as many of the fraudulent cases as possible.

AUC of the ROC curve is defined by the area under an ROC curve, which is constructed by plotting the true positive rate, TP / (TP + FN) versus the false positive rate, FP / (FP + TN) for various thresholds, which determines the label of fraudulent or not fraudulent. The area under this curve, or AUC, is a measure of separability of the model, and the closer to 1 it is, the higher the measure. It answers the question “is my classifier able to discriminate between fraud and not-fraud effectively” and is important to maximize since you want your classifier to separate the two classes accordingly.

Problem #8 Solution:

The equation this tradeoff is expressed in is given by the following: Total model error = Bias + Variance + Irreducible error. Flexible models have low bias and high variance, whereas more rigid models have high bias and low variance.

The bias term comes from the error when a model is under-fitting data. Having a high bias means that the machine learning model is too simple and does not capture the relationship between the features and the target. An example would be using linear regression when the underlying relationship is nonlinear.

The variance term comes from the error when a model is overfitting data. Having a high variance means that a model is susceptible to changes in training data, which means it is capturing too much noise. An example would be using a very complex neural network where the true underlying relationship between the features and the target is linear.

The irreducible term comes from the error that cannot be addressed directly by the model, such as from the noise in measurements of data.

Problem #12 Solution:

L1 and L2 regularization are both methods of regularization that attempt to prevent overfitting in machine learning. For a regular regression model assume the loss function is given by L. L1 adds the absolute value of the coefficients as a penalty term, whereas L2 adds the squared magnitude of the coefficients as a penalty term.

The loss function for the two are:

$$Loss(L_1) = L + \lambda |w_i| $$

$$Loss(L_2) = L + \lambda |w_i^2| $$

Where the loss function L is the sum of errors squared, given by the following, where f(x) is the model of interest, for example, linear regression with p predictors:

$$L = \sum_{i=1}^{n} (y_i - f(x_i))^2 = \sum_{i=1}^{n} (y_i - \sum_{j=1}^{p}(x_{ij}w_j) )^2 \space \text{for linear regression}$$

If we run gradient descent on the weights w, we find that L1 regularization will force any weight closer to 0, irrespective of its magnitude, whereas, for the L2 regularization, the rate at which the weight goes towards 0 becomes slower as the rate goes towards 0. Because of this, L1 is more likely to “zero” out particular weights, and hence removing certain features from the model completely, leading to more sparse models.

Problem #15 Solution:

Gradient descent is an algorithm that takes small steps in the direction of steepest descent for a particular objective function. Say we have the function f() and are currently at some point x at time t. Then gradient descent will update x as follows until convergence:

$$x^{t+1} = x^{t} -\alpha_t\nabla f(x^{t})$$

that is, we calculate the negative of the gradient of f and scale that by some constant, and move in that direction each iteration.

Since many loss functions are decomposable into the sum of individual functions, then the gradient step overall can be broken down into adding separate gradients. However, for very large datasets this can be computationally intensive, and the algorithm might get stuck at local minima or saddle points.

Therefore, we can use stochastic gradient descent (SGD) in which we get an unbiased estimate of the true gradient without going through all data points by uniformly selecting a point at random and doing a gradient update there.

The estimate is unbiased since we have:

$$\nabla f(x) = \frac{1}{n}\sum_{i=1}^{n}\nabla f_i(x)$$

and since data is assumed to be i.i.d, then for the SGD g(x) in expectation:

$$E[g(x)] = \nabla f(x)$$

19. Recall the objective function for linear regression where x is set of input vectors and w are the weights:

$$L(w) = E[(w^Tx-y)^2]$$

Assume that the noise added is Gaussian as follows:

$$\epsilon \sim N(0, \lambda I)$$

Then the new objective function is given by:

$$L(w) = E[(w^T(x + \epsilon)-y)^2]$$

To compute it, we simplify:

$$L'(w) = E[(w^T x -y + w^T\epsilon)^2]$$

$$L'(w) = E[(w^T x - y)^2 + 2(w^Tx-y)w^T\epsilon +w^T\epsilon \epsilon^Tw]$$

$$L'(w) = E[(w^T x - y)^2] + E[2(w^Tx-y)w^T\epsilon] + E[w^T\epsilon \epsilon^Tw]$$

We know that the expectation for ε is 0 so the middle term becomes 0 and we are left with:

$$L'(w) = L(w) + 0 + w^TE[\epsilon \epsilon^T]w$$

The last term can be simplified as:

$$L'(w) = L(w) + w^T\lambda Iw$$

And therefore the objective function simplifies to that of L2-regularization:

$$L'(w) = L(w) + \lambda||w||^2$$

Problem #21 Solution:

There are two potential cost functions here, one using the L1 norm and the other using the L2 norm. Below are two basic cost functions using an L1 and L2 norm respectively:

$$J(w) = ||Xw-y||$$

$$J(w) = |Xw-y|^2$$

It would be more sensible to use an L1 norm in this case since the L1 norm penalizes the outliers harder and thus gives less weight to the complete failures than the L2 norm does.

Additionally, it would be prudent to involve a regularization term to account for noise. If we assume that the noise added to each sensor uniformly as follows:

$$\epsilon \sim N(0, \lambda I)$$

then using traditional L2 regularization, we would have the cost function:

$$J(w) = ||Xw-y|| + \lambda||w||^2$$

However, given the fact that there are many sensors (and a broad range of how useful they are), we could instead assume that noise is added by:

$$\epsilon \sim N(0, \lambda D)$$

where each diagonal term in the matrix D represents the error term used for each sensor (and hence penalizing certain sensors more than others). Then our final cost function is given by:

$$J(w) = ||Xw-y|| + \lambda w^TDw$$

Problem #25 Solution:

The goal of an SVM is to form a hyperplane that linearly separates the given training data. Specifically it aims to maximize the margin, which is the minimum distance from the decision boundary to any training point. The points closest to the hyperplane are called the support vectors.

Mathematically, the hyperplane is given by the following, for some constant c:

$$H = \{h: w^Th = c \}$$

Now consider some arbitrary point x_i that is not on the hyperplane. The distance from x_i to H is the length of the projection from x_i-h to the vector perpendicular to H:

$$d = \frac{|w^T(x_i-h)|}{||w||_2} = \frac{|w^Tx_i-c|}{||w||_2}$$

To get the actual classification signs (positive vs. negative), we can multiply this distance by the sign on each y_i:

$$y_i*\frac{(w^Tx_i-c)}{||w||_2}$$

Assuming a margin of size m, then the optimization problem is to:

$$\max m \text{ s.t. } y_i*\frac{(w^Tx_i-c)}{||w||_2} \geq m$$

To ensure uniqueness we can set a constraint on m:

$$m = \frac{1}{||w||_2}$$

And therefore the final optimization problem is:

$$\max \frac{1}{||w||_2} \text{ s.t. } y_i*(w^Tx_i-c) \geq 1$$

Problem #29 Solution:

We have:

$$X \sim N(\mu, \sigma^2)$$

and entropy for a continuous random variable is given by:

$$H(x) = -\int_{-\}^{\infty}p(x)\log p(x) dx$$

For a Gaussian we have:

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}}e^{-\frac{(x-\mu)}{2\sigma^2}}$$

Plugging into the above yields:

$$H(x) = -\int_{-\infty}^{\infty}p(x)\log \sigma\sqrt{2\pi} dx -\int_{-\infty}^{\infty}p(x)(-\frac{(x-\mu)}{2\sigma^2}) dx$$

The first term is equal to

$$-\log \sigma\sqrt{2\pi}\int_{-\infty}^{\infty}p(x) dx = -\log \sigma\sqrt{2\pi}$$

since the integral evaluates to 1 (by definition of probability density). The second term is given by:

$$\frac{1}{2\sigma^2}\int_{-\infty}^{\infty}p(x)(x-\mu)^2 dx = \frac{\sigma^2}{2\sigma^2} = \frac{1}{2}$$

since the inner term is the expression for the variance. Therefore the entropy is:

$$H(x) = \frac{1}{2}-\log \sigma\sqrt{2\pi}$$

Where To Get More Data Science Interview Questions

Want more like this? Go buy Ace the Data Science Interview on Amazon Prime and practice Machine Learning Interview Questions on DataLemur!

You'll also enjoy this list of the best books for Data Scientists, which features 3 of our favorite Machine Learning books.